Introduction

The integration of artificial intelligence into enterprise operations promises significant benefits, yet successful implementation remains difficult. According to Harvard Business Review, approximately 80% of AI projects fail to deliver their intended outcomes. This statistic highlights the need for a structured approach to AI adoption, particularly in Quality Engineering, where precision and reliability are paramount. As noted by Harvard:

"AI is great at identifying patterns and providing predictions for well-formulated problems, but it fails to practice emotional intelligence and exercise moral or ethical judgment."

Understanding AI Project Failures

The high failure rate of AI initiatives can be attributed to several key factors:

- Misaligned priorities: Organisations often prioritise technological sophistication over addressing specific business challenges.

- Vendor-dependent technology stacks: Relying heavily on vendor-specific solutions limits flexibility and creates dependency.

- Strategic fragmentation: Many organisations pursue isolated AI use cases without an overarching strategy for productisation.

- Unrealistic expectations: There is a common misconception that AI solutions are plug-and-play, particularly in enterprise environments where significant engineering effort is required for successful implementation.

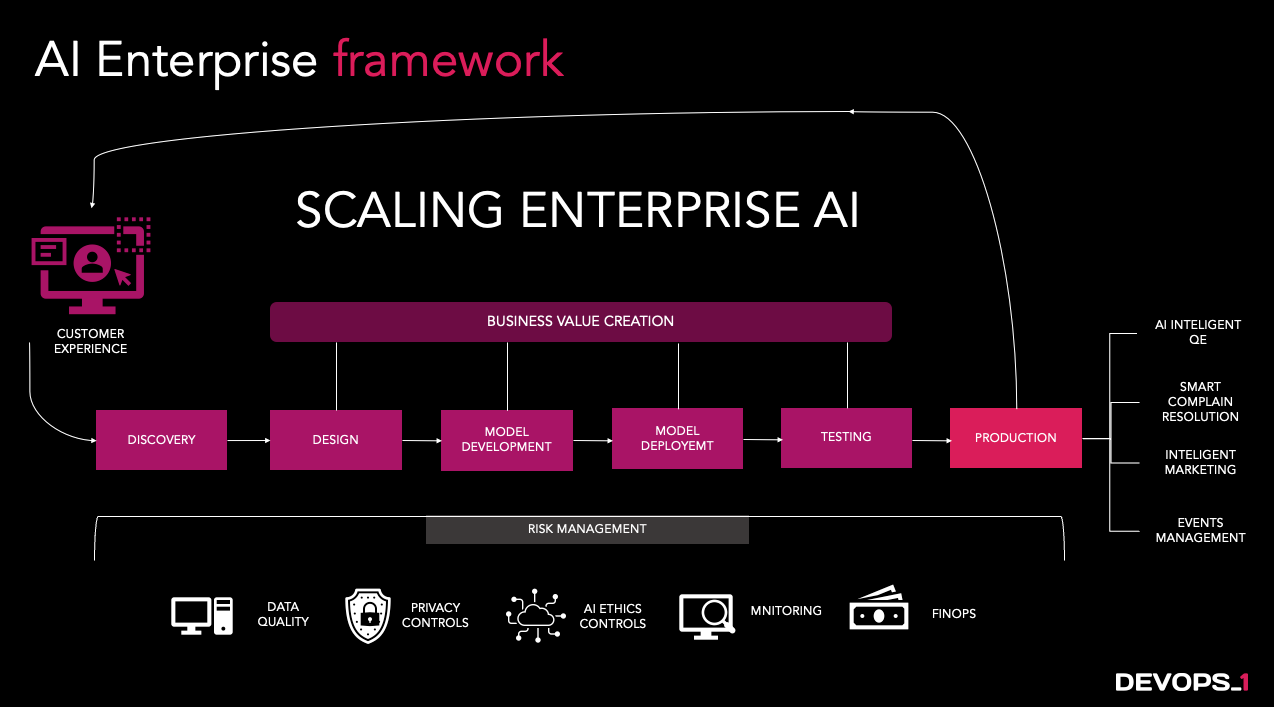

The DevOps1 AI Framework for Risk Mitigation

The DevOps1 AI approach provides a structured methodology to overcome these challenges by:

- Maintaining vendor neutrality: Avoiding excessive reliance on specific AI vendors preserves flexibility and reduces risk.

- Prioritising business outcomes: Focusing on addressing concrete business problems rather than implementing technology for its own sake.

- Investing in scalable infrastructure: Building systems capable of growing with organisational needs.

- Acknowledging limitations: Developing mitigation strategies and hybrid approaches that account for AI's current constraints.

A Strategic Implementation Process

Successful AI integration in Quality Engineering follows a comprehensive process:

- Business objective alignment: Clearly defining what success looks like from a business perspective.

- Current and future state analysis: Thoroughly assessing existing capabilities and establishing a vision for the future.

- Gap and risk identification: Identifying potential obstacles and planning mitigation strategies.

- Roadmap development: Creating a clear path forward with defined milestones.

- Success metric definition: Establishing quantifiable metrics to track progress.

- Governance implementation: Addressing compliance requirements, risk management, and ethical considerations.

AI_Augmented QE: A Comprehensive Quality Engineering Framework

Our framework gives Quality Engineering professionals a structured approach to maintaining and supporting each testing dimension:

- Unit Testing

- Functional Testing

- Security Testing

- Performance Testing

- Production-related Quality Engineering

The framework uses a generic template compatible with mainstream QE toolsets while building organisational knowledge about applications and processes. Its workflows can run in parallel or in sequence, depending on requirements, enabling IT teams to automate much of their quality engineering while focusing development resources on new features.
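To make the parallel and sequential configurations concrete, here is a minimal Python sketch, assuming each QE stage can be modelled as a callable that reports pass or fail. The `Stage` type, the mode names and the stop-on-first-failure rule for sequential runs are illustrative assumptions, not part of the framework itself.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Stage:
    """Hypothetical QE stage: a name plus a check that returns True on pass."""
    name: str
    run: Callable[[], bool]


def run_sequential(stages: List[Stage], stop_on_failure: bool = True) -> Dict[str, bool]:
    """Run stages one after another, optionally stopping at the first failure."""
    results: Dict[str, bool] = {}
    for stage in stages:
        results[stage.name] = stage.run()
        if stop_on_failure and not results[stage.name]:
            break  # later stages are skipped, so they are absent from the results
    return results


def run_parallel(stages: List[Stage], max_workers: int = 4) -> Dict[str, bool]:
    """Run independent stages concurrently in threads and collect every result."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {stage.name: pool.submit(stage.run) for stage in stages}
        # Results are gathered in the original stage order.
        return {name: future.result() for name, future in futures.items()}


def run_workflow(stages: List[Stage], mode: str) -> Dict[str, bool]:
    """Dispatch to the configured execution mode ("sequential" or "parallel")."""
    if mode == "sequential":
        return run_sequential(stages)
    if mode == "parallel":
        return run_parallel(stages)
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    demo = [
        Stage("unit", lambda: True),
        Stage("functional", lambda: False),
        Stage("security", lambda: True),
    ]
    print(run_workflow(demo, "sequential"))
    print(run_workflow(demo, "parallel"))
```

Sequential mode suits stages that gate one another (for example, skipping functional tests after unit failures), whereas parallel mode suits independent stages where the full set of results is wanted.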

Distinctive Approach to Quality Engineering

Unlike standard AI service offerings that often focus on general AI implementation, AI_Augmented QE is specifically designed for quality engineering processes. This specialisation allows for:

- Testing-specific AI augmentation: Rather than applying general AI capabilities to testing, it integrates AI directly into established QE workflows, enhancing rather than replacing existing quality processes.

- Comprehensive test coverage: The framework addresses the entire testing spectrum, from unit testing to production monitoring, to minimise gaps in quality coverage.

- QE process evolution: It enables gradual evolution of quality practices rather than disruptive transformation, allowing teams to build on existing strengths while incorporating AI advantages.

- Autonomous testing equilibrium: The framework carefully balances automation with human expertise, creating semi-autonomous testing environments that maximise effectiveness without surrendering quality control.

Enterprise AI Implementation for Quality Engineering

Effective enterprise AI development requires creating a stack that securely supports organisational use cases. This AI infrastructure must:

- Integrate seamlessly with existing IT systems and end-user workflows

- Continuously improve as it addresses more diverse use cases

- When utilising generative AI, reason effectively with organisational knowledge bases and understand company-specific ontology and data

Knowledge Engineering: A Distinctive Advantage

Knowledge engineering serves as a critical differentiator in the framework's approach to AI implementation. Unlike typical AI services that focus primarily on model development, knowledge engineering:

- Creates a unified knowledge foundation: Establishes a comprehensive understanding of the organisation's quality practices, testing artefacts, and system behaviours.

- Enables contextual intelligence: AI systems can interpret testing results within the proper organisational context rather than making generic assessments.

- Preserves institutional knowledge: Critical quality engineering insights are systematically captured rather than remaining siloed with individual team members.

- Facilitates continuous learning: The knowledge base evolves through a structured feedback mechanism, ensuring the AI's understanding of quality requirements matures in parallel with the organisation.

- Supports cross-functional alignment: Establishes a common quality language across development, testing, and business stakeholders.

This knowledge engineering approach creates a secure, scalable, hierarchical system for structuring the organisation's knowledge artefacts, including:

- Process-specific knowledge

- Factual information

- Dynamic knowledge that evolves over time

- Knowledge from external sources

- Information gathered through user interactions
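One way to picture such a hierarchy is a store that indexes artefacts first by the five categories above and then by a topic path. The following is a minimal in-memory Python sketch under that assumption; `KnowledgeKind`, `Artefact`, `KnowledgeStore` and the slash-separated paths are hypothetical names chosen for illustration, not the framework's actual data model.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Tuple


class KnowledgeKind(Enum):
    """The five artefact categories listed above."""
    PROCESS = "process-specific"
    FACTUAL = "factual"
    DYNAMIC = "dynamic"
    EXTERNAL = "external"
    USER_INTERACTION = "user interaction"


@dataclass
class Artefact:
    title: str
    content: str
    version: int = 1  # incremented each time the artefact is revised


Key = Tuple[KnowledgeKind, Tuple[str, ...]]


@dataclass
class KnowledgeStore:
    """Hypothetical in-memory store: artefacts indexed by kind and topic path."""
    _items: Dict[Key, List[Artefact]] = field(default_factory=dict)

    @staticmethod
    def _path(path: str) -> Tuple[str, ...]:
        # "payments/regression" -> ("payments", "regression"); "" -> ()
        return tuple(part for part in path.split("/") if part)

    def add(self, kind: KnowledgeKind, path: str, artefact: Artefact) -> None:
        self._items.setdefault((kind, self._path(path)), []).append(artefact)

    def find(self, kind: KnowledgeKind, prefix: str = "") -> List[Artefact]:
        """Return every artefact of `kind` stored at or below `prefix`."""
        parts = self._path(prefix)
        found: List[Artefact] = []
        for (k, path), artefacts in self._items.items():
            if k is kind and path[: len(parts)] == parts:
                found.extend(artefacts)
        return found

    def update(self, kind: KnowledgeKind, path: str, title: str, content: str) -> Artefact:
        """Revise an existing artefact in place (dynamic knowledge) and bump its version."""
        for artefact in self._items.get((kind, self._path(path)), []):
            if artefact.title == title:
                artefact.content = content
                artefact.version += 1
                return artefact
        raise KeyError(f"{kind.value}:{path}:{title}")
```

For example, artefacts stored under `payments/regression` and `payments/smoke` would both be returned by `find(KnowledgeKind.PROCESS, "payments")`. Access control, persistence and retrieval for generative AI would sit on top of a structure like this and are not shown.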

Risk Mitigation and Measurement: An Explicit Focus

Where many AI service providers emphasise capabilities while paying little attention to limitations, our framework explicitly addresses risk through:

- Structured risk assessment: Systematic evaluation of AI implementation risks specifically within quality engineering processes.

- Hybrid testing approaches: Strategic combination of AI-driven and traditional testing methodologies to provide complementary safeguards.

- Quantifiable success metrics: Clear definition of measurable outcomes to evaluate AI effectiveness in quality improvement.

- Phased implementation model: Risk mitigation through incremental adoption, allowing for course correction before full-scale deployment.

- Continuous monitoring mechanisms: Ongoing assessment of AI performance against established quality benchmarks.

This explicit focus on risk management ensures that AI enhances rather than compromises quality assurance practices.
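Quantifiable metrics and continuous monitoring can be combined into a simple gate that compares observed values with agreed benchmarks. The following is a minimal Python sketch, assuming each metric is a single number with a threshold and a direction ("higher is better" or "lower is better"). The metric names and thresholds shown in the usage are hypothetical placeholders, not recommended values.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Benchmark:
    """A hypothetical success metric with a threshold and a direction."""
    metric: str
    threshold: float
    higher_is_better: bool = True


def evaluate(observed: Dict[str, float], benchmarks: List[Benchmark]) -> Dict[str, str]:
    """Compare observed values with benchmarks: 'pass', 'fail' or 'missing'."""
    report: Dict[str, str] = {}
    for b in benchmarks:
        if b.metric not in observed:
            report[b.metric] = "missing"  # an unreported metric is treated as a gap
            continue
        value = observed[b.metric]
        ok = value >= b.threshold if b.higher_is_better else value <= b.threshold
        report[b.metric] = "pass" if ok else "fail"
    return report


def ready_for_next_phase(report: Dict[str, str]) -> bool:
    """Phased adoption: only proceed when every benchmark passes."""
    return all(status == "pass" for status in report.values())


if __name__ == "__main__":
    benchmarks = [
        Benchmark("defect_detection_rate", 0.80),
        Benchmark("false_positive_rate", 0.10, higher_is_better=False),
    ]
    report = evaluate({"defect_detection_rate": 0.85, "false_positive_rate": 0.12}, benchmarks)
    print(report, ready_for_next_phase(report))
```

A check like this could run at the end of each phase or on a schedule; any "fail" or "missing" result is a prompt for course correction before scaling further.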

Vendor-Agnostic Approach: Ensuring Flexibility and Independence

Unlike service providers who may maintain preferred technology partnerships, the framework's vendor-agnostic approach provides several strategic advantages:

- Technology flexibility: Freedom to select the most appropriate AI technologies for specific quality engineering challenges without vendor constraints.

- Reduced dependency risk: Protection against vendor-specific limitations or business changes that could impact quality processes.

- Best-of-breed integration: Ability to combine complementary technologies from multiple providers to create optimal quality engineering solutions.

- Future-proof architecture: Framework designed to incorporate emerging AI capabilities regardless of their source, ensuring longevity and adaptability.

- Cost efficiency: Ability to negotiate and select solutions based on value rather than established vendor relationships.

This vendor-neutral stance ensures that quality engineering practices remain driven by organisational needs rather than by the limitations of any single vendor's technology.
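Architecturally, vendor neutrality usually means that QE workflows depend on an interface rather than on a specific provider's SDK. The following is a minimal Python sketch of that adapter pattern using `typing.Protocol`. `SuggestionProvider`, `VendorAAdapter`, `LocalModelAdapter` and the `PROVIDERS` registry are hypothetical, and the adapters return canned strings where a real implementation would call a vendor or self-hosted model.

```python
from typing import Callable, Dict, List, Protocol


class SuggestionProvider(Protocol):
    """Vendor-neutral interface that the QE workflows depend on."""

    def generate(self, requirement: str) -> List[str]: ...


class VendorAAdapter:
    """Hypothetical adapter; a real one would wrap a vendor SDK call here."""

    def generate(self, requirement: str) -> List[str]:
        return [f"[vendor-a] verify: {requirement}"]


class LocalModelAdapter:
    """Hypothetical adapter for a self-hosted model, stubbed for illustration."""

    def generate(self, requirement: str) -> List[str]:
        return [
            f"[local] happy path: {requirement}",
            f"[local] negative case: {requirement}",
        ]


# Registry mapping configuration names to adapter factories.
PROVIDERS: Dict[str, Callable[[], SuggestionProvider]] = {
    "vendor-a": VendorAAdapter,
    "local": LocalModelAdapter,
}


def get_provider(name: str) -> SuggestionProvider:
    try:
        return PROVIDERS[name]()
    except KeyError:
        raise ValueError(f"unknown provider: {name}") from None


def draft_test_ideas(requirement: str, provider: str) -> List[str]:
    # Workflow code only sees the interface, so switching providers is a
    # configuration change rather than a rewrite.
    return get_provider(provider).generate(requirement)
```

Because each provider sits behind the same interface, several adapters can also be registered side by side, which is one way to support the best-of-breed combinations described above.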

Implementation Roadmap

The implementation process follows a structured timeline:

Phase 1: Foundation and Proof of Value

- Master Class (6 hours): Training on generative AI frameworks for QE, knowledge engineering, and prompt engineering

- Scoping (1 week): Defining Phase 1 scope and establishing success metrics

- Platform Setup (2 weeks): Implementing the platform within the organisation's environment

- Knowledge Engineering (2 weeks): Establishing knowledge artefacts and test data

- Validation (1 week): Sanity testing by DevOps1 engineers

- Proof of Value Execution (4 weeks): Gathering and analysing results against defined metrics
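The Phase 1 durations can be laid out as a week-by-week plan. The following is a minimal Python sketch, assuming the week-based activities run strictly back to back with no overlap (the roadmap does not state this) and that the 6-hour master class is held separately and not counted in weeks. Under that assumption Phase 1 spans 10 weeks; any overlap between activities would shorten it.

```python
from typing import List, Tuple

# Phase 1 week-based activities and durations from the roadmap above.
PHASE_1: List[Tuple[str, int]] = [
    ("Scoping", 1),
    ("Platform Setup", 2),
    ("Knowledge Engineering", 2),
    ("Validation", 1),
    ("Proof of Value Execution", 4),
]


def schedule(activities: List[Tuple[str, int]]) -> List[Tuple[str, int, int]]:
    """Assume sequential activities; return (name, start_week, end_week), 1-indexed, inclusive."""
    plan: List[Tuple[str, int, int]] = []
    week = 1
    for name, duration in activities:
        plan.append((name, week, week + duration - 1))
        week += duration
    return plan


def total_weeks(activities: List[Tuple[str, int]]) -> int:
    """Total elapsed weeks when nothing overlaps."""
    return sum(duration for _, duration in activities)


if __name__ == "__main__":
    for name, start, end in schedule(PHASE_1):
        print(f"{name}: weeks {start}-{end}")
    print(f"Total: {total_weeks(PHASE_1)} weeks")
```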

Phase 2: Scaling and Expansion

- Extending implementation to additional teams

- Broadening scope to include additional test types (mobile, security, performance, observability)

Conclusion

By adopting a strategic approach to AI integration in Quality Engineering, organisations can significantly improve their chances of success. The framework, combined with proper knowledge engineering and a focus on business outcomes, provides a robust foundation for AI-augmented quality processes.

What distinguishes our framework from standard AI solutions is its comprehensive approach to quality engineering, sophisticated knowledge management, explicit risk mitigation strategy, and vendor-agnostic flexibility. These distinctive characteristics address the fundamental reasons behind AI project failures, enabling organisations to realise the full potential of AI in enhancing quality assurance while avoiding common implementation pitfalls.

This approach not only mitigates the risks associated with AI implementations but also enables organisations to develop sustainable, evolving quality practices that deliver measurable business value.